Artificial intelligence (AI) was born from an ideal: making machines intelligent so that they can help humans progress. Researchers, engineers, artists, philosophers, and prophets have each, in turn, offered their own vision of where artificial intelligence is heading.

The intelligent machine is now unavoidable, and technology keeps evolving in this direction. But how did we get here?

Let's look back at some key episodes in the history of artificial intelligence, where fiction and reality have often crossed paths.

What is artificial intelligence?

Definition of artificial intelligence (AI)

Artificial intelligence, also called AI, refers to a set of software programs or connected objects that imitate human reasoning by carrying out automated, iterative tasks. An AI system adapts to the information it receives in order to respond to a given request.

Artificial intelligence is tied to the ability to think and learn: over the years, humans have developed systems capable of learning from the data they collect.

In parallel with scientific research and technological advances, culture has gradually taken hold of the field of artificial intelligence to create characters in novels, science fiction films, and even myths around the robot and the machine. The history of AI is rich in twists and turns, and we’re only at the beginning of this revolutionary technology, even though it can already be found in many everyday applications.

Detractors and transhumanists clash: whether for or against, these currents of thought have alternately encouraged and slowed the development of the technology. Given the scale of today's research and the international stakes, the question of whether to pursue AI is no longer really debated.

The main characteristics of AI and its different types

Artificial intelligence was originally developed to help humans by automating repetitive tasks.

As the technology advanced, several levels of intelligence came to be distinguished according to the tasks the software can perform.

Today, three types of artificial intelligence are considered:

- narrow artificial intelligence, which is limited to a small set of capabilities;

- general artificial intelligence, which is considered to be at the level of human capabilities; and

- artificial superintelligence, consisting of capabilities considered superior to those of humans.

Weak or narrow AI is the artificial intelligence we know today. These objects and programs are configured to perform a single task, whether it’s driving a car, recognizing faces or voices, or searching the internet. Although this kind of artificial intelligence can be very powerful, it’s limited to a single field of action.

Strong AI, also called general artificial intelligence, is a type of AI that would rival the human brain in its thinking and knowledge. It would be able to understand, think, and act with some initiative to solve problems. In some visions, such software would even have complex emotions and reasoning, as well as its own beliefs and thoughts. To date, researchers and scientists have not succeeded in developing strong AI.

Artificial superintelligence goes beyond imitating and understanding humans: at this highest stage of development, the hypothesis is that the machine would be conscious of itself and that its capacities would exceed those of humans. In the collective imagination, the machine could turn against humans overnight. This idea feeds cultural works and fantasies all over the world, notably dystopian science-fiction stories.

Although these last two concepts haven’t yet seen the light of day, they still drive humankind’s ambitions for the machine. These ideas push scientists to aim for ever more advanced forms of AI. A few years ago, today's inventions still belonged to the realm of utopia. When it comes to AI, reality often catches up with fiction, and the history of this technology proves it.

Who created artificial intelligence and when was it created?

How was AI born and how does artificial intelligence work?

1940–1960: The birth and creators of AI

Artificial intelligence and its first technical developments were born between the 1940s and the 1960s. Research accelerated after the Second World War, and AI came into the spotlight.

The first objectives of this technology were to automate commands using electronics, building on early mathematical models of computation. In 1943, Warren McCulloch and Walter Pitts proposed the first mathematical model of an artificial neuron, the ancestor of today's artificial neural networks. The first concept of artificial intelligence was born.

The 1950s then shaped AI as it would develop over the following years. During this decade, many engineers and researchers worked on the subject.

John von Neumann and Alan Turing laid the foundations of the technology behind AI. Their work was the starting point for the architecture of modern computers: the concept of the computer was born from the capacity of a machine to execute a program written by humans.

In 1950, Alan Turing proposed what became known as the Turing Test, which is still relevant today: it asks whether a machine can hold a conversation with a human without being identified as a machine.

At the same time, the machine began to develop: in 1952, it could play checkers, thanks to one of the first game-playing programs, created by Arthur Samuel.

The term "artificial intelligence" is attributed to John McCarthy (later of Stanford University). Marvin Minsky (MIT) defined it as "the construction of computer programs that perform tasks that are, for the moment, more satisfactorily accomplished by humans because they require high-level mental processes such as: perceptual learning, memory organization and critical reasoning." Research was conducted intensively during this period at various universities, including MIT, Carnegie Mellon University (under the direction of Allen Newell and Herbert Simon), the University of Edinburgh (Donald Michie), and in France with Jacques Pitrat.

The year 1956 is considered the official starting point of artificial intelligence because the expression was coined for the Dartmouth Summer Research Project on Artificial Intelligence, a summer workshop held at Dartmouth College to reflect on AI, organized by McCarthy and Minsky together with Nathaniel Rochester and Claude Shannon.

As the years passed, research in the field of artificial intelligence began to slow. At the beginning of the 1960s, the hype around artificial intelligence was fading: machines weren’t yet capable of delivering what had been promised. Insufficient memory, a lack of progress, and results that were slow to arrive pushed the scientific community and public authorities to step back from artificial intelligence.

1970–1990: The first steps of today's AI

It wasn’t until the following decades that research into artificial intelligence picked up again, helped by the spread of futuristic imagery in popular culture. In 1968, Stanley Kubrick's film 2001: A Space Odyssey was released, with an intelligent computer as one of its main characters. This legendary work shows that the public was becoming more and more interested in the scientific and technological discoveries of the future.

Although culture didn’t drive scientific progress, it made the field accessible to a wider audience and helped renew enthusiasm for this technology.

In 1965 and 1972, research at Stanford University put artificial intelligence at the service of molecular chemistry and health, with the expert systems DENDRAL and MYCIN. The foundations of today's artificial intelligence were laid: these systems were programmed to imitate human logic and to return answers when humans entered specific data.

These inventions did not, however, convince public authorities to keep investing in the field. The late 1980s and early 1990s saw a new AI winter, and the project was set aside for a time. The technology and the programming it required consumed too many resources for results that came too slowly.

Moreover, research was focused on the development of the home computer, so artificial intelligence was no longer a priority. A major event nevertheless marked the 1990s: the success of IBM's Deep Blue. In 1997, this system beat the world chess champion Garry Kasparov. For the first time, a machine was capable of such a feat. Even though it marked the history of artificial intelligence, Deep Blue was not the most convincing demonstration of intelligence: it relied largely on brute-force calculation within a single, well-defined domain, far from the futuristic and complex projections of a dreamed AI.

2010s: The new generation of AI

The big difference in this new generation of AI came from massive data. Algorithms were no longer limited to repeating predefined actions: they could now be given the ability to learn.

In 2011, Watson, IBM's artificial intelligence, beat human champions at the game show Jeopardy! In 2012, an AI developed at Google X, Google's research lab, learned to recognize cats in images taken from videos. This was the beginning of artificial intelligence as we know it today, namely software that’s able to learn and recognize objects on its own.

AlphaGo, Google DeepMind's artificial intelligence specialized in the game of Go, beat the European champion Fan Hui in 2015, then Lee Sedol, one of the world's best players, in 2016, before being beaten in turn by its own successor, AlphaGo Zero, in 2017.

The change in the concept of artificial intelligence was lasting: it’s no longer a question of imposing rules on machines but of letting machines discover the rules themselves by learning from the data collected.

Why was artificial intelligence created?

To create a machine that thinks

The goal of innovation has always been to extend the abilities of human beings. Artificial intelligence is meant to multiply human capacities across varied and complex fields of action.

Humans have invented objects that allow their attributes to be amplified, such as glasses to see up close or at a distance, a car to move faster and further, a microwave oven to cook food faster, etc. Artificial intelligence represents an extension of human intelligence. It’s a kind of holy grail of innovation because it combines all the most important human attributes in a single form of technology.

Over the years, the computing technologies behind artificial intelligence have specialized according to the needs of each system.

- Machine learning

This technology allows algorithms to learn by themselves from collected data. It’s currently the most widespread approach in AI for processing information and turning data into reasoned responses. Machine learning follows a four-step process: selecting the training data, selecting the algorithm to run, training the system through repeated passes over the data, and adjusting the model until the result is satisfactory. Machine learning can reach a high level of performance, bringing the machine closer to rational thinking.

- Deep learning

Deep learning goes further: here, systems rely on artificial neural networks with many layers, which lets them handle a higher level of complexity in processing data. This technology can mimic some aspects of human reasoning and make deductions, accurately recognize objects, or give a complex answer thanks to its learning capacity. Facial recognition, detection of pathologies in medicine, and assisted driving are some of the fields that use deep learning.
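To make the four-step machine learning process described above more concrete, here is a minimal sketch in Python. It is only an illustration under simple assumptions, not how any particular product works: the toy data, the choice of a straight-line model, and the names `training_data`, `predict`, `mean_squared_error`, and `train` are all hypothetical.

```python
# Step 1: select the training data.
# Hypothetical toy data that follows the hidden rule y = 2x + 1.
training_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]


# Step 2: select the algorithm.
# Here, a simple linear model y = w * x + b (an assumption for this sketch).
def predict(w, b, x):
    return w * x + b


def mean_squared_error(w, b, data):
    # Average squared gap between predictions and expected answers.
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)


# Steps 3 and 4: train by repetition, adjusting the model until the
# error is small enough (or a maximum number of passes is reached).
def train(data, learning_rate=0.05, max_epochs=10_000, tolerance=1e-8):
    w, b = 0.0, 0.0  # the model starts with no knowledge of the rule
    n = len(data)
    for _ in range(max_epochs):
        # Gradient of the mean squared error with respect to w and b.
        grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in data) / n
        grad_b = sum(2 * (predict(w, b, x) - y) for x, y in data) / n
        # Nudge the parameters in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        if mean_squared_error(w, b, data) < tolerance:
            break
    return w, b


w, b = train(training_data)
print(f"learned rule: y = {w:.2f} * x + {b:.2f}")
print(f"prediction for x = 10: {predict(w, b, 10.0):.2f}")
```

Nobody tells the program that the rule is "multiply by 2 and add 1": it starts from zero and gets close to that rule simply by repeating small corrections on the data. Deep learning follows the same overall loop, but replaces the straight line with a neural network that has many layers and many more parameters.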

The main purpose of using AI: to help humans

Artificial intelligence serves many purposes: today, it's everywhere around us and is developing in many directions. The main goal shared by all types of AI on the market is to help humans in their daily lives.

Whatever form the machine or software takes, its goal is to improve human skills, make data more quickly and easily accessible, or take over complex tasks previously performed by humans. In every case, it's a versatile tool for supporting human beings on a daily basis.

How has artificial intelligence evolved?

Movies and culture: artificial intelligence in science fiction

The myth of Frankenstein, created by Mary Shelley in her novel published in 1818, can be related to artificial intelligence: a scientist creates a thinking creature with his own hands.

The theme returns in the tale of Pinocchio, which Carlo Collodi began publishing in 1881: a wooden puppet becomes "human" in that he can think and feel.

In 1927, the film Metropolis portrayed a futuristic society and one of cinema's first humanoid robots. In 1968, Stanley Kubrick's film 2001: A Space Odyssey was also revolutionary: a computer is one of the main characters.

This futuristic theme is also widely present in the Star Wars films and in Star Trek.

From the 1980s to the early 2000s, the theme gained momentum in popular culture: Terminator, Aliens, the Matrix film series, A.I. Artificial Intelligence by Steven Spielberg, and more.

Artificial intelligence today in reality

Today, artificial intelligence is all around us: smartphones, computers, tablets, cars, public transportation, connected objects... Almost everything that’s part of a network now includes some form of artificial intelligence, to varying degrees.

Recommendation algorithms on platforms and social networks, hazard detection in cars, fall detection on a connected watch, voice recognition on voice assistants in our homes... Many connected objects are equipped with AI, and so is much of the software embedded in them.

Task automation is now at the heart of the technical developments sought by individuals and companies alike, in order to perform better and boost productivity.

With this in mind, businesses are innovating to offer the best services and strengthen each of their acquisition channels.

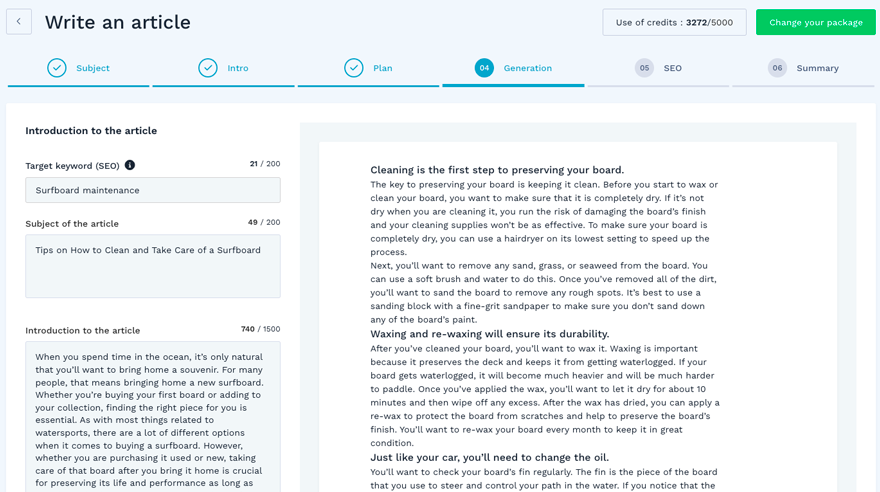

In ecommerce, competition is tough. If merchants aren’t responsive and don’t master all the marketing channels at their disposal, it’s difficult for them to stand out. With the aim of making high-performing ecommerce accessible to all, the WiziShop ecommerce solution now includes artificial intelligence: according to WiziShop, it is the first platform to integrate automatic text generation directly in its back office.

Here’s an overview of the performance of WiziShop's AI tool using GPT-3 technology: from a few pieces of information, text is automatically generated.

Launch yourself into the future of ecommerce with WiziShop: test the solution free for 7 days, discover the power of AI, and differentiate yourself from the competition.
