As a website owner looking to boost your site’s SEO, you probably focus on the content of your pages, your internal linking, the proper use of heading (Hn) tags, or even the acquisition of backlinks.

However, did you know that there are other levers that will give you a much deeper and more complete view of your site’s performance?

Log analysis is one of them: server logs contain key data and information to help improve your SEO.

What are logs?

Logs are stored in a file on your website’s server. They contain a significant amount of information, recorded directly by the server that hosts your site.

Each resource loaded on a page (CSS, images, JavaScript, ...) generates a log line in the file.

Log files therefore contain thousands of lines, with new ones added every day for each request made to the server (also called “hits”). This gives the site owner a wealth of data!

Each log line lists all kinds of information related to a site’s performance:

- time and date of the request (timestamp);

- IP address from which a request was sent to your server;

- URL requested;

- status code: 200, 301, 404, 500...;

- user agent;

- referrer, indicating the previous page visited (Googlebot does not send one);

- size of the response (page weight);

- response time; and

- protocol used (HTTP or HTTPS).
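To make the list above concrete, here is a minimal sketch of how a single log line could be split into those fields. It assumes the widely used Apache/Nginx "combined" log format, which may not match your server’s configuration (response time, for instance, is not part of that default format and has to be added by the host). The sample line and the `parse_log_line` helper are made up for illustration.

```python
import re
from datetime import datetime

# Assumption: the server writes the Apache/Nginx "combined" log format.
# Adjust the pattern if your host uses a different layout.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_log_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    hit = match.groupdict()
    hit["timestamp"] = datetime.strptime(hit["timestamp"], "%d/%b/%Y:%H:%M:%S %z")
    hit["status"] = int(hit["status"])
    # The size field is "-" when no body was sent.
    hit["size"] = 0 if hit["size"] == "-" else int(hit["size"])
    return hit


# Hypothetical sample line, for illustration only.
sample = (
    '66.249.66.1 - - [05/Apr/2016:10:15:32 +0000] '
    '"GET /products/blue-shirt HTTP/1.1" 200 5120 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
hit = parse_log_line(sample)
print(hit["url"], hit["status"], hit["user_agent"])
```

Each parsed line becomes a small dictionary, which is a convenient shape for the kinds of counts and comparisons described in the rest of this article.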

Why analyze logs in SEO?

As you can see, logs contain a lot of data that can be used as part of an SEO strategy. John Mueller of Google has also confirmed this!

@glenngabe Log files are so underrated, so much good information in them.

— 🧀 John 🧀 (@JohnMu) April 5, 2016

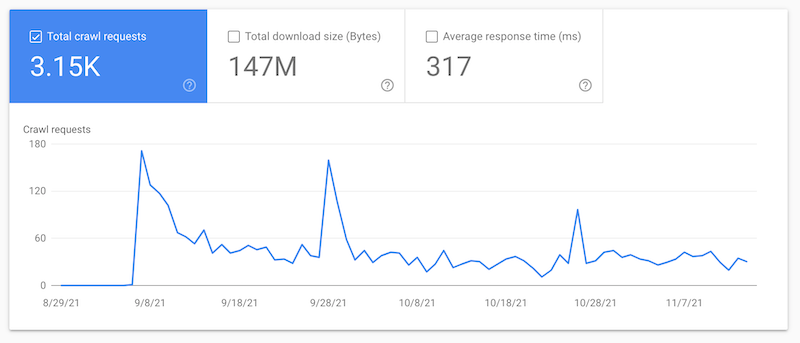

The main benefit of log analysis is that it lets you reconstruct Googlebot’s path through your site.

And yes, the IP address (together with the user agent) recorded in each log line allows you to differentiate between search engine robots and human visitors!
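Since a user agent string can be spoofed, the IP address is the more reliable signal. Google documents a verification method based on a reverse DNS lookup followed by a forward lookup; the sketch below follows that idea. The function name and the injectable `reverse_lookup` / `forward_lookup` parameters are illustrative choices, not part of any library, and this version only handles IPv4 addresses.

```python
import socket

# Hostname suffixes used by Google's crawlers in reverse DNS records.
GOOGLE_DOMAINS = (".googlebot.com", ".google.com")


def is_verified_googlebot(
    ip,
    reverse_lookup=socket.gethostbyaddr,
    forward_lookup=socket.gethostbyname_ex,
):
    """Check an IPv4 address with a reverse DNS lookup, then a forward lookup."""
    try:
        hostname = reverse_lookup(ip)[0]
        if not hostname.endswith(GOOGLE_DOMAINS):
            return False
        # The hostname must resolve back to the same IP address.
        return ip in forward_lookup(hostname)[2]
    except OSError:
        # Covers failed lookups (socket.herror and socket.gaierror).
        return False


# Example call (performs real DNS queries, so it needs network access):
# is_verified_googlebot("66.249.66.1")
```

DNS lookups are slow, so in practice it may be worth caching the result per IP address rather than checking every log line.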

Google Search Console can also help you collect information on the passage of Google’s robots.

However, it doesn’t offer live data: the information reported is a few days late. Log analysis, by contrast, lets you monitor this data in real time!

The log analysis will allow you to do the following:

- Monitor your site: You get live information related to the crawling of your site (performance, HTTP codes, page weight, crawl volume...)

- Improve your site: You get concrete SEO actions to facilitate the passage of search engine robots (correction of 404 errors and unnecessary 301 redirects, improvement of page loading time and system security, detection of crawler traps with faceted filters or pagination...)

- Cross-reference data: The logs will give you access to very interesting data about your site, but you can go even further by cross-referencing this information with other sources, such as data from an SEO crawler, sales revenue, page depth, etc.

How does Googlebot work?

The crawl budget

Google assigns a certain “crawl time,” also called “crawl budget,” to different sites.

This will vary according to different signals such as the popularity of the site, the freshness of the content, the number of pages, etc.

This budget determines how much time Google’s robots will spend on your site.

The infrastructure behind this crawling requires significant resources for the search engine. Crawling a page has a cost.

By optimizing Googlebot’s path on your site, you make the search engine’s job easier and also help it save resources!

Crawl budget and large sites?

The “crawl budget” is mostly associated with large sites. On a 100-page site, this notion is negligible.

However, be careful, as the site you see may not be the same as the one Googlebot sees. Crawler traps or “spider traps” are a typical example of a problem that can impact the crawl budget.

For example, a bad configuration of faceted filters, an external application, or an internal search engine can generate an infinite number of URLs. It’s these URLs that will trap the robots.

The risk is that the robots will focus on these low-quality URLs and put aside your URLs that are beneficial for SEO.

In Googlebot’s eyes, your site can therefore contain thousands or even millions of pages without you even knowing it.
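One rough way to spot a possible crawler trap in the logs is to count how many different query strings were requested for the same path. The sketch below is a simple heuristic under assumed inputs: the `paths_with_many_variants` name, the threshold value, and the sample URLs are all hypothetical and should be adapted to your own site.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def paths_with_many_variants(urls, threshold=100):
    """Return {path: number of distinct query strings} for suspicious paths."""
    variants = defaultdict(set)
    for url in urls:
        parts = urlsplit(url)
        if parts.query:
            variants[parts.path].add(parts.query)
    return {
        path: len(queries)
        for path, queries in variants.items()
        if len(queries) >= threshold
    }


# Hypothetical URLs crawled by a robot, for illustration only.
crawled = [
    "/shoes?color=red",
    "/shoes?color=blue",
    "/shoes?color=red&size=42",
    "/about",
    "/cart?id=1",
]
print(paths_with_many_variants(crawled, threshold=3))
```

A path that shows up with hundreds of query string combinations in robot traffic is a good candidate for a closer look at its filters or internal search settings.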

That’s why, by default, on WiziShop stores, sensitive pages (faceted filters, sorting filters, shopping cart, paginations, etc.) are efficiently managed to avoid these problems.

SEO information to retrieve with log analysis

What Google sees

This is one of the first pieces of information to analyze with the logs, closely linked to what I just stated above: Does Google have the same vision of the site as me?

As the owner, you know your catalog, your best products, and your business pages that bring you revenue.

By carefully analyzing Google's journey, you'll quickly see if it prefers certain pages on your site to others. The frequency of robot visits clearly highlights Google’s preferences.

So, is the search engine also focused on these important pages during visits?

If you notice that Google’s robots spend most of their time on pages that offer little SEO value (contact page, pagination, filters, ...), it’s likely that you’ll have to make adjustments!

You must do everything possible to make sure that Google has the same vision of your site as you do.

The objective of this analysis is to verify that the pages that matter most for your SEO are also the ones that Google visits frequently.

The main reasons why robots neglect certain parts of your site are often related to your internal linking or the depth of the pages.
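This comparison can be sketched in a few lines. The example assumes the log lines have already been parsed into dictionaries with `url` and `user_agent` keys (a hypothetical structure), and it identifies Googlebot by user agent only, without the IP verification a real audit would add.

```python
from collections import Counter


def googlebot_hits_per_url(hits):
    """Count how many times Googlebot requested each URL."""
    return Counter(h["url"] for h in hits if "Googlebot" in h["user_agent"])


def neglected_pages(hits, important_urls, min_hits=1):
    """List important URLs that Googlebot requested fewer than min_hits times."""
    counts = googlebot_hits_per_url(hits)
    return [url for url in important_urls if counts[url] < min_hits]


# Hypothetical parsed hits, for illustration only.
hits = [
    {"url": "/best-seller", "user_agent": "Googlebot/2.1"},
    {"url": "/contact", "user_agent": "Googlebot/2.1"},
    {"url": "/contact", "user_agent": "Googlebot/2.1"},
    {"url": "/new-collection", "user_agent": "Mozilla/5.0 (Windows NT 10.0)"},
]
print(googlebot_hits_per_url(hits).most_common())
print(neglected_pages(hits, ["/best-seller", "/new-collection"]))
```

Here the second call flags `/new-collection`, an important page in this made-up example that only a human visitor requested.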

HTTP codes

Monitoring and analyzing the logs will help you identify the URLs of your site that respond correctly with a 200 code and, more importantly, those that return redirect or error codes such as 301, 404, or 500.

URLs returning codes in the 3xx, 4xx, or 5xx families can harm both the crawling of your site by search engine robots and the user experience.

With this analysis, you can easily detect these HTTP codes and implement corrections when necessary.
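As an illustration, the sketch below groups hits by status code family and lists the URLs behind each non-200 code. It assumes hits are already parsed into dictionaries with `url` and integer `status` keys; those names and the sample data are hypothetical.

```python
from collections import Counter, defaultdict


def status_summary(hits):
    """Return (hits per status family, URLs grouped by redirect or error code)."""
    families = Counter(f"{h['status'] // 100}xx" for h in hits)
    problem_urls = defaultdict(set)
    for h in hits:
        if h["status"] >= 300:
            problem_urls[h["status"]].add(h["url"])
    return families, dict(problem_urls)


# Hypothetical parsed hits, for illustration only.
hits = [
    {"url": "/", "status": 200},
    {"url": "/old-page", "status": 301},
    {"url": "/missing", "status": 404},
    {"url": "/missing", "status": 404},
    {"url": "/checkout", "status": 500},
]
families, problem_urls = status_summary(hits)
print(dict(families))
print(problem_urls)
```

The per-code URL lists give a direct starting point for corrections, such as updating internal links that still point to redirected or missing pages.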

Loading speed

What could be more annoying than a web page that loads slowly?

If this is the case on your online store, it’s important to correct this performance problem because it’s one of the most discouraging elements for visitors and for conversions.

A page that doesn’t open can lead to all your traffic going to your main competitors...

Speed matters for crawling too: a very slow site slows down the passage of Google’s robots, which will therefore crawl fewer URLs.

For example, if each page of your site loads in 4 seconds and you manage to lower this to 2 seconds, the robots could, in the same amount of crawl time, visit up to twice as many URLs!
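The arithmetic behind this example can be written out as follows. This is a deliberate simplification: it assumes a fixed crawl time and pages fetched one after another, whereas real crawlers fetch in parallel and adapt their rate. The 600-second budget is an arbitrary figure chosen for illustration.

```python
def pages_crawled(crawl_seconds, seconds_per_page):
    """Number of pages fetched one after another within a fixed crawl time."""
    return int(crawl_seconds // seconds_per_page)


budget = 600  # hypothetical crawl time in seconds
before = pages_crawled(budget, 4)  # 150 pages at 4 seconds per page
after = pages_crawled(budget, 2)   # 300 pages at 2 seconds per page
print(before, after, after / before)
```

Halving the load time doubles the number of pages that fit into the same crawl time, which is where the "twice as many URLs" figure comes from.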

Orphan pages

The analysis of logs can also be coupled with other data, such as the crawl of an SEO tool, to generate numerous analyses.

This is the case with orphan pages for example.

Orphan pages are pages of your site that are online and known by Google but aren’t linked from anywhere in your site structure: no internal link points to them.

In this case, they appear in the log data because they’re visited by the search engine, but they aren’t detected during a crawl of your site.

These pages may even generate traffic and rank quite well! Imagine the results if you brought them back into your internal linking.
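In other words, orphan pages are the URLs found in the logs but missing from the crawl, which is a simple set difference. The sketch below assumes you have two lists of URLs in the same normalized form, one from the logs and one exported from an SEO crawler; the function name and sample URLs are hypothetical.

```python
def find_orphan_pages(log_urls, crawled_urls):
    """Return URLs that robots requested but that the site crawl never reached."""
    return sorted(set(log_urls) - set(crawled_urls))


# Hypothetical data, for illustration only.
urls_in_logs = ["/", "/shoes", "/old-product", "/shoes"]
urls_in_crawl = ["/", "/shoes", "/contact"]
print(find_orphan_pages(urls_in_logs, urls_in_crawl))
```

For the comparison to be meaningful, both lists need the same conventions (trailing slashes, protocol, query strings), otherwise identical pages may look different.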

There are several reasons for the presence of orphaned pages. For instance, it may be that they’re old pages, formerly attached to your site, that have now lost their links. This may be the case for an out-of-stock product that you no longer list in your product categories but that is still online.

With cross-analysis of logs and other data sources and tools, you can obtain a significant amount of information that can aid you in improving the management and security of your online store.

The log file is a real gold mine, but you still need to know how to use it...

That’s why there’s a dedicated French tool that aims to make log analysis accessible to all: Seolyzer. And guess what? We recently made our partnership with this tool official on our ecommerce platform!

WiziShop is now the only SaaS solution to offer log analysis.

To take advantage of this new feature, enjoy a 10% discount on your monthly Seolyzer subscription by applying the code "wizi" when you sign up.